Training An AI Eye On Exploration Of The Moon

Eddie Gonzales Jr. – MessageToEagle.com – A Moon-scanning method that can automatically classify important lunar features from telescope images could significantly improve the efficiency of selecting sites for exploration.

Scanning by eye across an area as large as the lunar surface, looking for features perhaps only a few hundred meters across, is laborious and often inaccurate, which makes it difficult to pick optimal areas for exploration.

Siyuan Chen, Xin Gao and Shuyu Sun, along with colleagues from The Chinese University of Hong Kong, have now applied machine learning and artificial intelligence (AI) to automate the identification of prospective lunar landing and exploration areas.

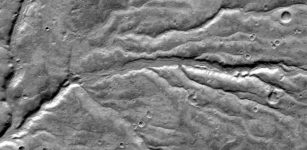

“We are looking for lunar features like craters and rilles, which are thought to be hotspots for energy resources like uranium and helium-3 — a promising resource for nuclear fusion,” says Chen. “Both have been detected in Moon craters and could be useful resources for replenishing spacecraft fuel.”

Moon – Image credit: NASA/GSFC/Arizona State University


Machine learning is an effective way to train an AI model to find specific features on its own, but supervised training needs labeled examples to learn from. The first problem faced by Chen and his colleagues was that there was no labeled dataset for rilles that could be used to train their model.

“We overcame this challenge by constructing our own training dataset with annotations for both craters and rilles,” says Chen. “To do this, we used an approach called transfer learning to pretrain our rille model on a surface crack dataset with some fine-tuning using actual rille masks. Previous approaches require manual annotation for at least part of the input images; our approach does not require human intervention and so allowed us to construct a large, high-quality dataset.”
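Chen describes transfer learning only at a high level. As a rough illustration, and not the team's actual pipeline, the toy sketch below pretrains a tiny per-pixel classifier on plentiful "crack" images, then fine-tunes the same weights on a handful of "rille" images. The synthetic images (dark lines on a bright background), the single-feature logistic-regression "model" and every function name here are hypothetical choices made to keep the example small; a real system would use a deep segmentation network trained on real imagery.

```python
import numpy as np


def toy_line_image(rng, size=32, half_width=1):
    """Hypothetical toy data: a dark vertical line on a noisy bright background."""
    mask = np.zeros((size, size), dtype=bool)
    col = int(rng.integers(half_width, size - half_width))
    mask[:, col - half_width:col + half_width + 1] = True
    img = np.where(mask, 0.2, 0.8) + 0.05 * rng.standard_normal((size, size))
    return img, mask


def features(img):
    """Per-pixel features: standardized intensity plus a constant bias column."""
    x = (img - img.mean()) / img.std()
    return np.stack([x.ravel(), np.ones(img.size)], axis=1)


def train(w, samples, lr=0.5, epochs=200):
    """Fit per-pixel logistic regression by gradient descent, starting from w."""
    X = np.concatenate([features(img) for img, _ in samples])
    y = np.concatenate([mask.ravel() for _, mask in samples]).astype(float)
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))   # predicted probability per pixel
        w = w - lr * X.T @ (p - y) / len(y)  # cross-entropy gradient step
    return w


def predict(w, img):
    """Return a boolean mask: True where the model predicts the feature."""
    return (features(img) @ w > 0).reshape(img.shape)


rng = np.random.default_rng(0)

# Stage 1: pretrain on many labeled "crack" images (thin lines).
cracks = [toy_line_image(rng, half_width=1) for _ in range(50)]
w_pretrained = train(np.zeros(2), cracks)

# Stage 2: fine-tune on only a few "rille" images (wider lines),
# starting from the pretrained weights instead of from scratch.
rilles = [toy_line_image(rng, half_width=2) for _ in range(5)]
w_rille = train(w_pretrained, rilles, epochs=50)

# Evaluate on a held-out rille image using intersection over union (IoU).
test_img, test_mask = toy_line_image(rng, half_width=2)
pred = predict(w_rille, test_img)
iou = (pred & test_mask).sum() / (pred | test_mask).sum()
print(f"IoU on held-out rille image: {iou:.2f}")
```

The key design point is in stage 2: fine-tuning begins from `w_pretrained`, so the small rille set only has to adjust what the model already learned from the larger crack set.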

The next challenge was developing a computational approach that could be used to identify both craters and rilles at the same time, something that had not been done before.

“This is a pixel-to-pixel problem for which we need to accurately mask the craters and rilles in a lunar image,” says Chen.

“We solved this problem by constructing a deep learning framework called high-resolution-moon-net, which has two independent networks that share the same network architecture to identify craters and rilles simultaneously.”
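The article does not describe the internals of high-resolution-moon-net, so the sketch below illustrates only the structural idea in the quote: two networks built from the same architecture, each with its own independent weights, run on the same image to produce separate crater and rille masks. `TinySegNet` (a single 3x3 filter plus a sigmoid) and `DualNetworkDetector` are hypothetical names, and the weights are random and untrained, so the resulting masks carry no real meaning.

```python
import numpy as np


class TinySegNet:
    """Hypothetical stand-in for one segmentation network: a single 3x3
    filter plus a sigmoid, mapping an image to a per-pixel probability map."""

    def __init__(self, rng):
        # Each instance draws its own random weights (no parameter sharing).
        self.kernel = 0.1 * rng.standard_normal((3, 3))
        self.bias = 0.0

    def __call__(self, img):
        h, w = img.shape
        padded = np.pad(img, 1, mode="edge")  # keep output the same size as input
        out = np.full((h, w), self.bias)
        for dy in range(3):
            for dx in range(3):
                out += self.kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
        return 1.0 / (1.0 + np.exp(-out))


class DualNetworkDetector:
    """Two networks with the same architecture but independent weights:
    one produces a crater mask, the other a rille mask, from the same image."""

    def __init__(self, seed=0):
        rng = np.random.default_rng(seed)
        self.crater_net = TinySegNet(rng)
        self.rille_net = TinySegNet(rng)

    def __call__(self, img, threshold=0.5):
        craters = self.crater_net(img) >= threshold
        rilles = self.rille_net(img) >= threshold
        # Combined label map: 0 = background, 1 = crater, 2 = rille, 3 = both.
        labels = craters.astype(np.uint8) + 2 * rilles.astype(np.uint8)
        return craters, rilles, labels


detector = DualNetworkDetector(seed=42)
image = np.random.default_rng(1).random((64, 64))  # placeholder for a lunar tile
craters, rilles, labels = detector(image)
print(craters.shape, rilles.shape, labels.shape)  # (64, 64) (64, 64) (64, 64)
```

Keeping the two networks independent lets each be trained on its own labels (crater masks or rille masks) while sharing a single architecture definition. This is the "pixel-to-pixel" setup the quote describes: every input pixel receives an output label.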

The team’s approach achieved precision as high as 83.7 percent, higher than existing state-of-the-art methods for crater detection.
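The article does not say whether precision was measured per pixel or per detected crater. Precision is the fraction of predicted positives that are correct, TP / (TP + FP), so 83.7 percent means that roughly 837 of every 1,000 predictions counted as crater are real. The sketch below assumes pixel-wise binary masks, which is an illustrative assumption and not the team's stated evaluation protocol. It also reports recall, because precision alone does not show how many features the model missed.

```python
import numpy as np


def precision_recall(pred, truth):
    """Pixel-wise precision and recall for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)    # predicted feature, truly a feature
    fp = np.sum(pred & ~truth)   # predicted feature, actually background
    fn = np.sum(~pred & truth)   # missed feature
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return float(precision), float(recall)


pred = np.array([[1, 1, 1], [0, 1, 0]])
truth = np.array([[1, 0, 0], [0, 1, 1]])
print(precision_recall(pred, truth))  # (0.5, 0.6666666666666666)
```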

Written by Eddie Gonzales Jr. – MessageToEagle.com Staff