A New Camera Can Image Objects As Close As 3 Centimeters And As Far Away As 1.7 Kilometers

Eddie Gonzales Jr. – MessageToEagle.com – Five hundred million years ago, the oceans teemed with trillions of trilobites—creatures that were distant cousins of horseshoe crabs.

All trilobites had a wide range of vision, thanks to compound eyes: single eyes composed of tens to thousands of tiny independent units, each with its own cornea, lens and light-sensitive cells. But one group, Dalmanitina socialis, was exceptionally farsighted.

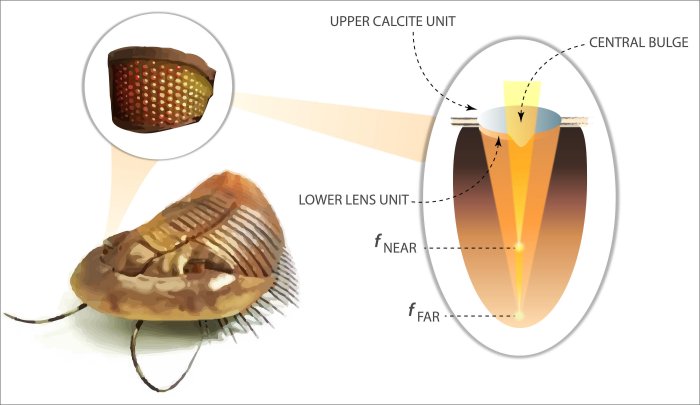

Inspired by the compound eyes of a species of trilobite, researchers at NIST developed a metalens that can simultaneously image objects both near and far. This illustration shows the structure of the lens of an extinct trilobite. Credit: NIST

Their bifocal eyes, each mounted on stalks and composed of two lenses that bent light at different angles, enabled these sea creatures to simultaneously view prey floating nearby as well as distant enemies approaching from more than a kilometer away.

Inspired by the eyes of D. socialis, researchers at the National Institute of Standards and Technology (NIST) have developed a miniature camera featuring a bifocal lens with a record-setting depth of field—the distance over which the camera can produce sharp images in a single photo. The camera can simultaneously image objects as close as 3 centimeters and as far away as 1.7 kilometers. They devised a computer algorithm to correct for aberrations, sharpen objects at intermediate distances between these near and far focal lengths and generate a final all-in-focus image covering this enormous depth of field.
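To put that range in perspective, a rough sketch using the standard thin-lens depth-of-field formulas shows how narrow the in-focus zone of a conventional lens is at close range. The 10 mm focal length, f/2.8 aperture and 0.005 mm circle of confusion below are illustrative assumptions, not parameters of the NIST camera.

```python
import math

def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance H = f^2 / (N * c) + f for an ideal thin lens."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def dof_limits_mm(subject_mm, focal_mm, f_number, coc_mm):
    """Return (near, far) limits of acceptable sharpness, in millimeters."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    # At or beyond the hyperfocal distance the far limit extends to infinity.
    if subject_mm >= h:
        far = math.inf
    else:
        far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far

# Hypothetical conventional lens (assumed values, for illustration only).
FOCAL_MM, F_NUMBER, COC_MM = 10.0, 2.8, 0.005

near, far = dof_limits_mm(30.0, FOCAL_MM, F_NUMBER, COC_MM)
print(f"Focused at 3 cm: sharp from {near:.2f} mm to {far:.2f} mm")

h = hyperfocal_mm(FOCAL_MM, F_NUMBER, COC_MM)
near_h, far_h = dof_limits_mm(h, FOCAL_MM, F_NUMBER, COC_MM)
print(f"Focused at hyperfocal distance: sharp from {near_h / 1000:.2f} m to {far_h}")
```

Under these assumed values, a lens focused at 3 centimeters keeps well under a millimeter of depth sharp, and the same lens focused at its hyperfocal distance is sharp only from roughly 3.6 meters outward. A single conventional focus setting cannot cover both ends of the range described above.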

Such lightweight, large-depth-of-field cameras, which integrate photonic technology at the nanometer scale with software-driven photography, promise to revolutionize future high-resolution imaging systems. In particular, the cameras would greatly boost the capacity to produce highly detailed images of cityscapes, groups of organisms that occupy a large field of view and other photographic applications in which both near and far objects must be brought into sharp focus.

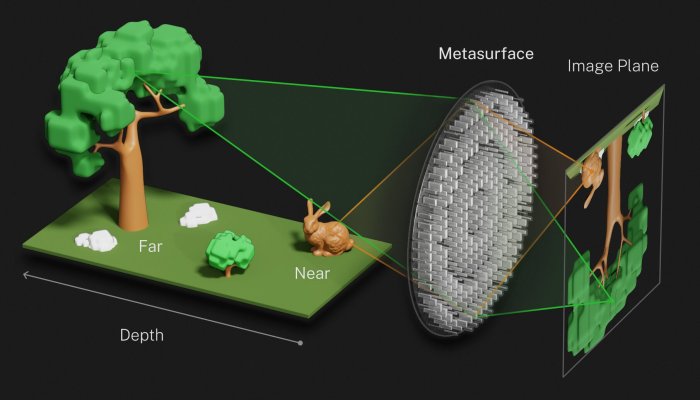

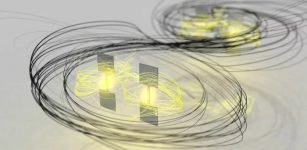

Illustration of how the metalens, modeled on the compound lens of a trilobite, simultaneously focuses on objects both near (rabbit) and far (tree). Credit: S. Kelley/NIST

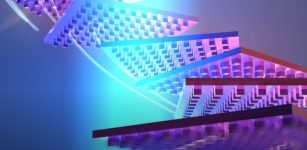

The researchers fabricated an array of tiny lenses known as metalenses. These are ultrathin films etched or imprinted with groupings of nanoscale pillars tailored to manipulate light in specific ways. To design their metalenses, NIST researcher Amit Agrawal and his colleagues studded a flat surface of glass with millions of tiny, rectangular nanometer-scale pillars. The shape and orientation of the constituent nanopillars focused light in such a way that the metasurface simultaneously acted as a macro lens (for close-up objects) and a telephoto lens (for distant ones).

Specifically, the nanopillars captured light from a scene of interest, which can be divided into two equal parts—light that is left circularly polarized and right circularly polarized. (Polarization refers to the direction of the electric field of a light wave; left circularly polarized light has an electric field that rotates counterclockwise, while right circularly polarized light has an electric field that rotates clockwise.)
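The "two equal parts" idea can be checked with a small Jones-vector calculation. This is a minimal sketch, not the team's code: it assumes one common sign convention for left and right circular polarization (conventions differ between textbooks), and the helper name `circular_power_fractions` is made up for this example. It shows that linearly polarized light at any angle carries half its power in each circular component, which is also why unpolarized light splits evenly.

```python
import math

SQRT2 = math.sqrt(2)
# Jones vectors for the two circular states (assumed handedness convention).
LCP = (complex(1 / SQRT2, 0), complex(0, 1 / SQRT2))
RCP = (complex(1 / SQRT2, 0), complex(0, -1 / SQRT2))

def circular_power_fractions(ex, ey):
    """Fraction of optical power in the left and right circular components.

    ex, ey are the (possibly complex) x and y field amplitudes.
    """
    total = abs(ex) ** 2 + abs(ey) ** 2
    # Project the field onto each circular basis state.
    amp_left = LCP[0].conjugate() * ex + LCP[1].conjugate() * ey
    amp_right = RCP[0].conjugate() * ex + RCP[1].conjugate() * ey
    return abs(amp_left) ** 2 / total, abs(amp_right) ** 2 / total

# Linearly polarized light at several angles splits evenly in every case.
for angle_deg in (0, 30, 77):
    theta = math.radians(angle_deg)
    left, right = circular_power_fractions(math.cos(theta), math.sin(theta))
    print(f"{angle_deg:>3} deg linear: left={left:.3f}, right={right:.3f}")
```

Feeding the function a purely circular state, such as `circular_power_fractions(*LCP)`, puts all of the power in one component instead.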

The nanopillars bent the left and right circularly polarized light by different amounts, depending on the orientation of the nanopillars. The team arranged the nanopillars, which were rectangular, so that some of the incoming light had to travel through the longer part of the rectangle and some through the shorter part. In the longer path, light had to pass through more material and therefore experienced more bending. In the shorter path, the light had less material to travel through and therefore experienced less bending.

Light that is bent by different amounts is brought to a different focus. The greater the bending, the closer the light is focused. In this way, depending on whether light traveled through the longer or shorter part of the rectangular nanopillars, the metalens produces images of both distant objects (1.7 kilometers away) and nearby ones (a few centimeters).
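The link between bending and focus can be illustrated with the textbook thin-lens equation, 1/f = 1/s_o + 1/s_i, where s_o is the object distance and s_i the lens-to-sensor distance. The sketch below is an idealization rather than the NIST design: the 50 mm lens-to-sensor distance is a made-up value, and a real metalens is not a simple thin lens. It only shows that, for a fixed sensor position, a nearby object needs a much shorter focal length (stronger bending) than a distant one.

```python
def focal_length_mm(object_mm, image_mm):
    """Focal length that images an object at object_mm onto a sensor at image_mm.

    Uses the thin-lens equation 1/f = 1/s_o + 1/s_i.
    """
    return 1.0 / (1.0 / object_mm + 1.0 / image_mm)

SENSOR_MM = 50.0       # hypothetical lens-to-sensor distance (assumption)
NEAR_OBJECT_MM = 30.0  # 3 centimeters, from the article
FAR_OBJECT_MM = 1.7e6  # 1.7 kilometers, from the article

near_f = focal_length_mm(NEAR_OBJECT_MM, SENSOR_MM)
far_f = focal_length_mm(FAR_OBJECT_MM, SENSOR_MM)

print(f"Focal length needed for the near object: {near_f:.2f} mm")
print(f"Focal length needed for the far object:  {far_f:.2f} mm")
```

With these assumed numbers the near object calls for a focal length of about 18.75 mm, while the far object calls for one just under 50 mm. A bifocal element supplies both at once, one for each polarization.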

Without further processing, however, that would leave objects at intermediate distances (several meters from the camera) unfocused. Agrawal and his colleagues used a neural network—a computer algorithm that mimics the human nervous system—to teach software to recognize and correct for defects such as blurriness and color aberration in the objects that resided midway between the near and far focus of the metalens. The team tested its camera by placing objects of various colors, shapes and sizes at different distances in a scene of interest and applying software correction to generate a final image that was focused and free of aberrations over the entire kilometer range of depth of field.

The metalenses developed by the team boost light-gathering ability without sacrificing image resolution. In addition, because the system automatically corrects for aberrations, it has a high tolerance for error, enabling researchers to use simple, easy-to-fabricate designs for the miniature lenses, Agrawal said.

Written by Eddie Gonzales Jr. – MessageToEagle.com Staff
